Are Your AI Logos Actually Kryptonite?

By Matthew B. Harrison

TALKERS, VP/Associate Publisher

Harrison Media Law, Senior Partner

Goodphone Communications, Executive Producer

Superman just flew into court – not against Lex Luthor, but against Midjourney. Warner Bros. Discovery is suing the AI platform, accusing it of stealing the studio’s crown jewels: Superman, Batman, Wonder Woman, Scooby-Doo, Bugs Bunny, and more.


At first glance, you might shrug. “That’s Warner Bros. vs. Silicon Valley – what does it have to do with my talk media show?” Here’s the answer: everything. If you (or your producer) are using Midjourney, DALL·E, or Stable Diffusion for logos, promos, or podcast cover art, you’re standing in the same blast radius.

AI Isn’t Neutral Paint

The romance of AI graphics is speed and cost. Need a logo in five minutes? A flyer for a station event? A podcast cover? Fire up an AI tool and you’re done.

But those images don’t come from a blank canvas. They come from models trained on copyrighted works – often without permission. Warner Bros. alleges that Midjourney not only trained on its characters but knowingly let users download knockoff versions.

If Warner wins – or even squeezes a settlement – AI platforms will clamp down. Suddenly, the “free” art you’ve been posting may not just vanish; it may become a liability.

Too Small to Matter? Think Again

Here’s the legal catch: infringement liability doesn’t shrink with the size of your audience. A podcaster with a Facebook page is just as liable as a network if the artwork copies protected content.

It’s easy to imagine a rival, competitor, or ex-producer spotting an AI-made graphic that looks “too much like” something else – and firing off a takedown. Once that happens, you’re judged not by intent but by what you published.

Unlike on-air speech, where FCC rules set the guardrails, there’s no regulator here to referee the dispute. This is civil court: you versus the claimant – and the billable hours start immediately.

Even Elon Musk Just Got Burned

Neuralink – Elon Musk’s brain-computer startup – just lost its bid to trademark the words “Telepathy” and “Telekinesis.” Someone else filed first.

If Musk’s lawyers can’t secure simple branding terms, what chance does your station or company have if you wait until after launch to file your new show name? Timing isn’t just strategy; it’s survival.

The Playbook

- Audit Your AI Use. Know which graphics and promos are AI-generated, and from what platform.

- File Early. Register show names and logos before the launch hype.

- Budget for Ownership. A real designer who assigns you copyright is safer than a bot with murky training data.

The Bottom Line

AI may feel like a shortcut, but in media law it’s a trapdoor. If Warner Bros. will defend Superman from an AI platform, they won’t ignore your podcast artwork if it looks too much like the Man of Steel.

Big or small, broadcaster or podcaster – if your AI Superman looks like theirs, you’re flying straight into Kryptonite.

Matthew B. Harrison is a media and intellectual property attorney who advises radio hosts, content creators, and creative entrepreneurs. He has written extensively on fair use, AI law, and the future of digital rights. Reach him at Matthew@HarrisonMediaLaw.com or read more at TALKERS.com.

Imagine an AI trained on millions of books – and a federal judge saying that’s fair use. That’s exactly what happened this summer in Bartz v. Anthropic, a case now shaping how creators, publishers, and tech giants fight over the limits of copyright.

Ninety seconds. That’s all it took. One of the interviews on the TALKERS Media Channel – shot, edited, and published by us – appeared elsewhere online, chopped into jumpy cuts, overlaid with AI-generated video game clips, and slapped with a clickbait title. The credit? A link. The essence of the interview? Repurposed for someone else’s traffic.

Imagine a listener “talking” to an AI version of you – trained entirely on your old episodes. The bot knows your cadence, your phrases, even your voice. It sounds like you, but it isn’t you.

Imagine SiriusXM acquires the complete Howard Stern archive – every show, interview, and on-air moment. Months later, it debuts “Howard Stern: The AI Sessions,” a series of new segments created with artificial intelligence trained on that archive. The programming is labeled AI-generated, yet the voice, timing, and style sound like Stern himself.

You did everything right – or so you thought. You used a short clip, added commentary, or reshared something everyone else was already posting. Then one day, a notice shows up in your inbox. A takedown. A demand. A legal-sounding, nasty-toned email claiming copyright infringement and asking for payment.

Mark Walters

In radio and podcasting, editing isn’t just technical – it shapes narratives and influences audiences. Whether trimming dead air, tightening a guest’s comment, or pulling a clip for social media, every cut leaves an impression.

Let’s discuss how CBS’s $16 million settlement became a warning shot for every talk host, editor, and content creator with a mic.

In early 2024, voters in New Hampshire got strange robocalls. The voice sounded just like President Joe Biden, telling people not to vote in the primary. But it wasn’t him. It was an AI clone of his voice – sent out to confuse voters.

When Georgia-based, nationally syndicated radio personality and Second Amendment advocate Mark Walters (longtime host of “Armed American Radio”) learned that ChatGPT had falsely claimed he was involved in a criminal embezzlement scheme, he did what few in the media world have dared to do. Walters stood up when others were silent and took on one of the most powerful tech companies in the world in a court of law.

In a ruling that should catch the attention of every talk host and media creator dabbling in AI, a Georgia court has dismissed “Armed American Radio” syndicated host Mark Walters’ defamation lawsuit against OpenAI. The case revolved around a disturbing but increasingly common glitch: a chatbot “hallucinating” false but believable information.

In the ever-evolving landscape of digital media, creators often walk a fine line between inspiration and infringement. The 2015 case of “Equals Three, LLC v. Jukin Media, Inc.” offers a cautionary tale for anyone producing reaction videos or commentary-based content: fair use is not a free pass, and transformation is key.

As the practice of “clip jockeying” becomes an increasingly ubiquitous and taken-for-granted technique in modern audio and video talk media, an understanding of the legal concept “fair use” is vital to the safety and survival of practitioners and their platforms.

competition last evening (2/22) at the 1st Circuit Court of Appeals in Boston, MA. The American Bar Association, Law Student Division holds a number of annual national moot court competitions. One such event, the National Appellate Advocacy Competition, emphasizes the development of oral advocacy skills through a realistic appellate advocacy experience, with moot court competitors participating in a hypothetical appeal to the United States Supreme Court. This year’s legal question focused on the Communications Decency Act – “Section 230” – and how its exemption from liability for internet service providers for the acts of third parties applies to a realistic scenario: a journalist’s photo-turned-meme being used in advertising (CBD, ED treatment, gambling) without permission or compensation, in violation of applicable state right of publicity statutes. Harrison tells TALKERS, “We are at one of those sensitive times in history where technology is changing at a quicker pace than the legal system and legislators can keep up with – particularly at the consequential juncture of big tech and mass communications. I was impressed and heartened by the articulateness and grasp of the Section 230 issue displayed by the law students arguing before me.”


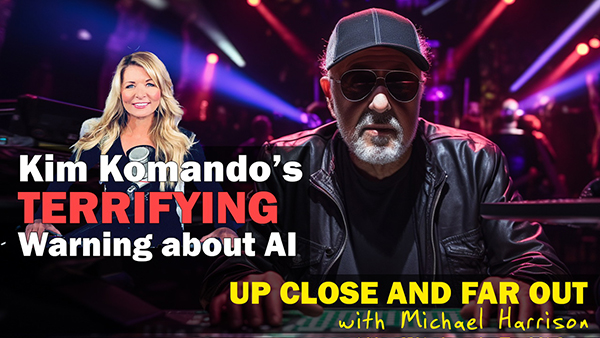

sponsored by Newsmax, was introduced by TALKERS associate publisher and media attorney, Matthew B. Harrison, Esq. (pictured at right) and moderated by TALKERS publisher, Michael Harrison (pictured above). Panelists (pictured below from left to right) include Lee Harris, director of integrated operations, NewsNation; Lee Habeeb, host/producer, “Our American Stories”; Kraig Kitchin, CEO, Sound Mind, LLC/chair, Radio Hall of Fame; Arthur Aidala, Esq., founding partner, Aidala, Bertuna & Kamins, PC/host, AM 970 The Answer, New York; Chad Lopez, president, WABC, New York/Big Apple Media; and Dr. Asa Andrew, CEO/host, “The Doctor Asa Show.”
